DepthAI RPi HAT

BW1094

The Raspberry Pi HAT Edition lets you use a Raspberry Pi you already have, and it passes the Pi's GPIO through so the pins remain accessible and usable in your system. Its modular cameras can be mounted on your platform wherever you need them, up to six inches away from the HAT.

- Mounts to Raspberry Pi as a HAT for easy integration

- All Raspberry Pi GPIO still accessible through pass-through header

- Flexible camera mounting with 6-inch flexible flat cables (FFC)

- Includes three FFC camera ports

Requirements

- A Raspberry Pi with an extended 40-pin GPIO header.

- Python 3.7
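You can confirm that the interpreter on your Pi meets the Python 3.7 requirement before going further. The sketch below is a minimal check; the helper name `meets_python_requirement` is hypothetical and is not part of DepthAI.

```python
import sys

# Minimum interpreter version listed in the requirements above.
REQUIRED = (3, 7)


def meets_python_requirement(version_info=None, required=REQUIRED):
    """Return True if version_info (default: the running interpreter) is at least `required`."""
    if version_info is None:
        version_info = sys.version_info
    # Compare only (major, minor); tuple comparison is lexicographic.
    return tuple(version_info[:2]) >= tuple(required)


if __name__ == "__main__":
    current = "{}.{}".format(*sys.version_info[:2])
    if meets_python_requirement():
        print("Python", current, "meets the 3.7 requirement")
    else:
        print("Python", current, "is older than 3.7; install a newer Python 3")
```

Run it with `python3` so it checks the same interpreter that will later run the demo script.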

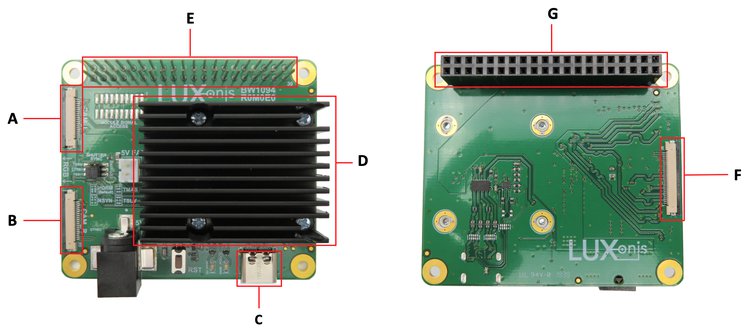

Board Layout

| Label | Component |
|---|---|
| A | Left Camera Port |
| B | Right Camera Port |
| C | USB 3.0 Type-C |
| D | DepthAI Module |
| E | Pass-through 40-pin Raspberry Pi Header |
| F | Color Camera Port |
| G | 40-pin Raspberry Pi Header |

What’s in the box?

- DepthAI RPi HAT Carrier Board

- Pre-flashed µSD card loaded with Raspbian 10 and DepthAI

- USB 3 Type-C cable (6 in.)

Setup

Follow the steps below to set up your DepthAI device.

Power off your Raspberry Pi.

Safely power off your Raspberry Pi and unplug it from power.

Insert the pre-flashed µSD card into your RPi.

The µSD card is pre-configured with Raspbian 10 and DepthAI.

Mount the DepthAI RPi HAT.

Use the included hardware to mount the DepthAI RPi HAT to your Raspberry Pi.

Reconnect your RPi power supply.
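Once power is restored, you can confirm that the Pi booted from the pre-flashed card. Raspbian, like other Debian-based systems, describes itself in `/etc/os-release`, and Raspbian 10 reports `ID=raspbian` and `VERSION_ID="10"`. The parser below is a minimal sketch; `parse_os_release` and `is_raspbian_10` are hypothetical helpers, not DepthAI API.

```python
def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in double or single quotes.
        info[key] = value.strip().strip('"').strip("'")
    return info


def is_raspbian_10(info):
    """True if the parsed os-release describes Raspbian 10."""
    return info.get("ID") == "raspbian" and info.get("VERSION_ID") == "10"


if __name__ == "__main__":
    try:
        with open("/etc/os-release") as f:
            info = parse_os_release(f.read())
    except OSError:
        print("Could not read /etc/os-release")
    else:
        print(info.get("PRETTY_NAME", "unknown OS"))
        print("Raspbian 10:", is_raspbian_10(info))
```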

Update the DepthAI API.

Upgrade the DepthAI API to the latest version.
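If the DepthAI Python API on your card is installed from PyPI as the `depthai` package (an assumption; if your local depthai repository pins a specific version in its requirements, follow that repository's update instructions instead), the upgrade is a standard pip upgrade. The sketch below reports the installed version and builds the pip command for the same interpreter that runs the demo; it only prints the command unless `dry_run=False`. The helper names are hypothetical.

```python
import subprocess
import sys


def upgrade_command(package="depthai"):
    """Build a pip command that upgrades `package` for the running interpreter."""
    # `sys.executable -m pip` targets the same Python 3 that runs depthai_demo.py,
    # avoiding a mismatch between `pip` and `python3` on the Pi.
    return [sys.executable, "-m", "pip", "install", "--upgrade", package]


def installed_depthai_version():
    """Return depthai's reported version, or None if it cannot be imported."""
    try:
        import depthai
    except ImportError:
        return None
    # Fall back gracefully in case a release does not expose __version__.
    return getattr(depthai, "__version__", "unknown")


def upgrade(package="depthai", dry_run=True):
    """Print the upgrade command (dry run) or execute it and return pip's exit code."""
    cmd = upgrade_command(package)
    if dry_run:
        print("Would run:", " ".join(cmd))
        return 0
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    print("Installed depthai:", installed_depthai_version())
    upgrade(dry_run=True)  # pass dry_run=False to actually upgrade
```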

Calibrate Stereo Cameras.

If you have the stereo camera pair, calibrate it with the DepthAI calibration script.

Run the DepthAI Python test script.

We’ll execute a DepthAI example Python script to ensure your setup is configured correctly. Follow these steps to test DepthAI:

- Start a terminal session.

- Access your local copy of depthai: `cd [depthai repo]`

- Run `python3 depthai_demo.py`.
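The two terminal steps above can also be scripted, which is handy when you run the test over SSH. This is a minimal sketch: `~/depthai` is a hypothetical stand-in for `[depthai repo]`, so substitute the path of your own checkout.

```python
import os
import subprocess
import sys

# Hypothetical location of the local depthai repository; replace with your path.
REPO_DIR = os.path.expanduser("~/depthai")


def demo_command(repo_dir=REPO_DIR):
    """Return (command, working directory) for launching the demo."""
    # Equivalent to `cd [depthai repo]` followed by `python3 depthai_demo.py`.
    return [sys.executable, "depthai_demo.py"], repo_dir


def run_demo(repo_dir=REPO_DIR):
    """Launch depthai_demo.py from inside the repo; return its exit code (1 if missing)."""
    cmd, cwd = demo_command(repo_dir)
    if not os.path.isfile(os.path.join(cwd, cmd[1])):
        print("depthai_demo.py not found in", cwd)
        return 1
    return subprocess.run(cmd, cwd=cwd).returncode


if __name__ == "__main__":
    run_demo()
```

Running from inside the repository keeps any paths the demo resolves relative to its working directory valid.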

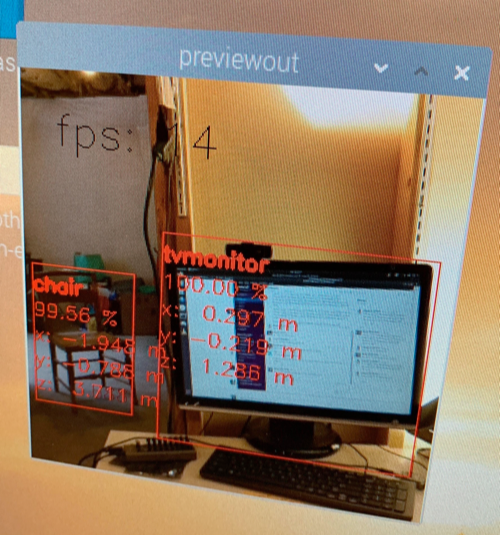

The script launches a window, starts the cameras, and displays a video stream annotated with object localization metadata.

In the screenshot above, DepthAI identified a tv monitor (1.286 m from the camera) and a chair (3.711 m from the camera). See the list of object labels in our pre-trained OpenVINO model tutorial.
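Each annotation pairs a class label from the detection model with a distance from stereo depth. The sketch below shows that mapping for a MobileNet-SSD-style model trained on PASCAL VOC, whose label set includes `chair` and `tvmonitor`. Treating the demo's default model as this one, and the `Detection` record itself, are assumptions for illustration; this is not DepthAI's API, and the distances used are simply the ones quoted above.

```python
from collections import namedtuple

# PASCAL VOC class names in the order used by common MobileNet-SSD models
# (index 0 is background). Assumed here, not read from DepthAI.
VOC_LABELS = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus",
    "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike",
    "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

# Hypothetical detection record: class index and distance in meters.
Detection = namedtuple("Detection", ["label", "depth_m"])


def annotate(det, labels=VOC_LABELS):
    """Format one detection as 'name: distance m', falling back to the raw index."""
    if 0 <= det.label < len(labels):
        name = labels[det.label]
    else:
        name = str(det.label)
    return "{}: {:.3f} m".format(name, det.depth_m)


if __name__ == "__main__":
    for det in [Detection(20, 1.286), Detection(9, 3.711)]:
        print(annotate(det))
```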
