DepthAI USB3 | Modular Cameras

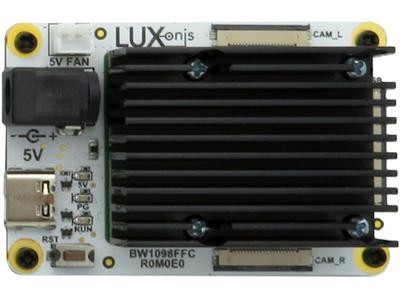

BW1098FFC

Use DepthAI on your existing host. Because the AI/vision processing runs on the Myriad X, a typical desktop could handle tens of DepthAI devices plugged in at once; the practical limit is how many USB ports the host provides.
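Since the practical limit is USB ports, it can help to check how many Myriad X devices a Linux host actually sees. The sketch below parses `lsusb` output and assumes the boards enumerate under the Intel Movidius vendor ID `03e7`. That vendor ID is an assumption about your hardware, and `find_myriad_devices` is a hypothetical helper, not part of the DepthAI API.

```python
import re
import shutil
import subprocess
from typing import List, Tuple

# Assumption: Myriad X boards enumerate under the Intel Movidius USB vendor ID 03e7.
MOVIDIUS_VENDOR_ID = "03e7"

# Matches lsusb lines such as "Bus 002 Device 003: ID 03e7:xxxx <description>".
LSUSB_LINE = re.compile(r"^Bus (\d+) Device (\d+): ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})")


def find_myriad_devices(lsusb_output: str) -> List[Tuple[str, str, str]]:
    """Return (bus, device, product_id) for every Movidius entry in lsusb output."""
    found = []
    for line in lsusb_output.splitlines():
        match = LSUSB_LINE.match(line.strip())
        if match and match.group(3).lower() == MOVIDIUS_VENDOR_ID:
            found.append((match.group(1), match.group(2), match.group(4).lower()))
    return found


if __name__ == "__main__":
    if shutil.which("lsusb") is None:
        print("lsusb not found (it is provided by the usbutils package)")
    else:
        # No check=True: whatever lsusb prints is parsed even if it exits non-zero.
        result = subprocess.run(["lsusb"], stdout=subprocess.PIPE,
                                stderr=subprocess.PIPE, universal_newlines=True)
        devices = find_myriad_devices(result.stdout)
        print("{} Myriad X device(s) found".format(len(devices)))
        for bus, dev, pid in devices:
            print("  bus {}, device {}, product ID {}".format(bus, dev, pid))
```

The parser is kept separate from the `subprocess` call so it can be tested against saved `lsusb` output.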

Requirements

- Ubuntu 18.04 or Raspbian 10

- Cameras

  - Modular color camera

  - Stereo camera pair (if depth is required)

- USB3C cable

- USB3C port on the host

- A supported Python version on the host
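A quick way to compare a host against the requirements above is to read `/etc/os-release`. This is a minimal sketch that assumes Ubuntu 18.04 reports `ID=ubuntu` / `VERSION_ID="18.04"` and Raspbian 10 reports `ID=raspbian` / `VERSION_ID="10"`. It only prints the Python version, because the list above does not name specific supported versions. The helper names are hypothetical.

```python
import platform
from typing import Dict

# (ID, VERSION_ID) pairs for the host OSes listed in the requirements.
# Assumption: these are the values each OS writes to /etc/os-release.
SUPPORTED_OS = {("ubuntu", "18.04"), ("raspbian", "10")}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from an os-release file, stripping optional quotes."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def is_supported_os(fields: Dict[str, str]) -> bool:
    """True if the (ID, VERSION_ID) pair matches one of the listed host OSes."""
    return (fields.get("ID", ""), fields.get("VERSION_ID", "")) in SUPPORTED_OS


if __name__ == "__main__":
    try:
        with open("/etc/os-release") as f:
            fields = parse_os_release(f.read())
    except OSError:
        fields = {}
    status = "listed" if is_supported_os(fields) else "not in the requirements list"
    print("OS: {} ({})".format(fields.get("PRETTY_NAME", "unknown"), status))
    print("Python: {}".format(platform.python_version()))
```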

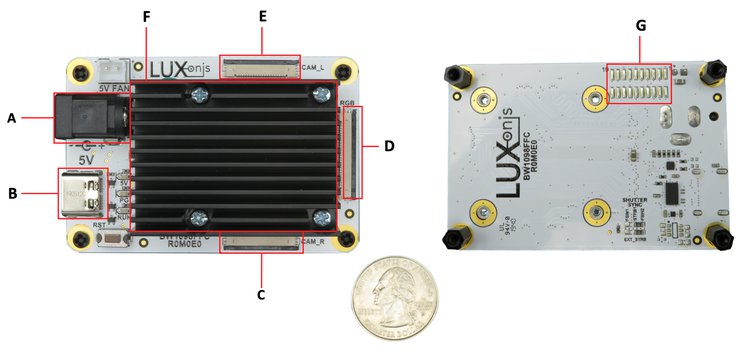

Board Layout

| Label | Component |
| --- | --- |
| A | 5 V IN |
| B | USB3C |
| C | Right Camera Port |
| D | Color Camera Port |
| E | Left Camera Port |
| F | DepthAI Module |
| G | Myriad X GPIO Access |

What’s in the box?

- DepthAI USB3 Modular Cameras Carrier Board

- USB3C cable (6 ft.)

- Power Supply

Setup

Follow the steps below to set up your DepthAI device.

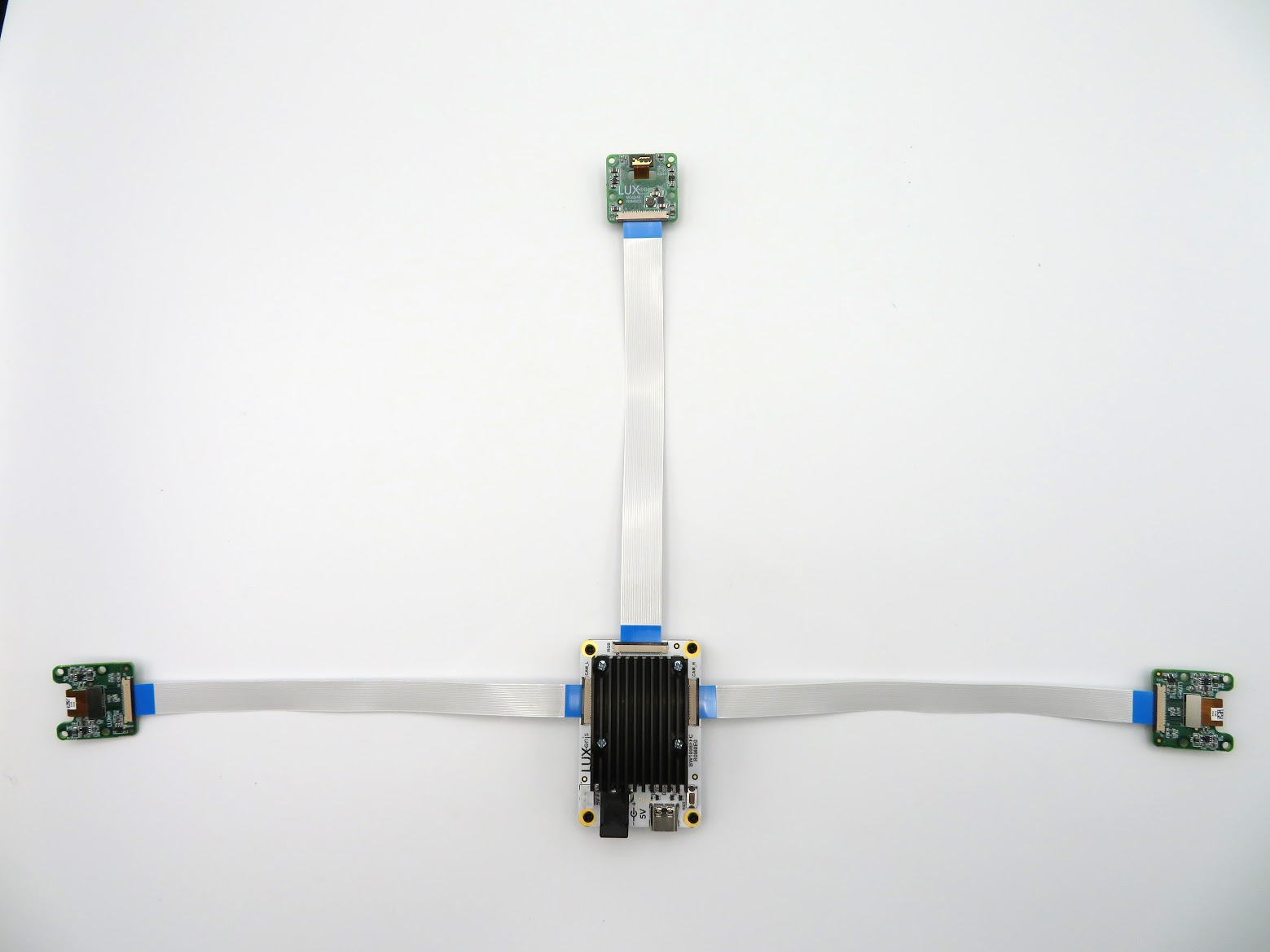

Connect your modular cameras.

The FFC (flexible flat cable) connectors on the BW1098FFC require care when handling. Once inserted and latched, the connectors are robust, but they are easily damaged during de-latching, particularly if too much force is applied.

The video below shows a tool-free technique for safely latching and de-latching these connectors.

Once the flexible flat cables are securely latched, you should see something like this:


Note: when looking at the connectors, the blue stripe should face up.


Make sure the FFC cables connect to the cameras on the top side of the final setup. Otherwise, images will be inverted and the `swap_left_and_right_cameras` setting will be wrong.
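To make the two failure modes concrete, here is a hypothetical host-side sketch. If the left and right cables are routed the other way, the frames land on the wrong side of the stereo pair, which is presumably what `swap_left_and_right_cameras` compensates for. If a camera is mounted upside down, its image is rotated 180 degrees. Frames are modeled as nested lists, and `assign_stereo` and `rotate_180` are illustrative names, not DepthAI API calls. The recommended fix is still the physical cable orientation described above.

```python
from typing import List, Tuple

Frame = List[List[int]]  # toy grayscale frame: a list of pixel rows


def assign_stereo(frame_a: Frame, frame_b: Frame,
                  swap_left_and_right: bool) -> Tuple[Frame, Frame]:
    """Return (left, right), swapping the pair when the cables are routed the other way."""
    return (frame_b, frame_a) if swap_left_and_right else (frame_a, frame_b)


def rotate_180(frame: Frame) -> Frame:
    """Undo an upside-down mount: reverse the row order and each row's pixels."""
    return [list(reversed(row)) for row in reversed(frame)]
```

For example, `rotate_180([[1, 2], [3, 4]])` returns `[[4, 3], [2, 1]]`. Applying it twice gives back the original frame.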

Connect your host to the DepthAI USB carrier board.

Connect the DepthAI USB power supply (included).

Install the Python DepthAI API.
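To confirm the install from the same interpreter you will run the demo with, you can check whether a module named `depthai` is importable. This is a minimal sketch using only the standard library. It assumes the package's import name is `depthai`, and `depthai_installed` is a hypothetical helper.

```python
import importlib.util
import sys


def depthai_installed() -> bool:
    """Return True if a module named 'depthai' can be found by this interpreter."""
    return importlib.util.find_spec("depthai") is not None


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "at", sys.executable)
    print("depthai importable:", depthai_installed())
```

Printing `sys.executable` helps catch the common case where the package was installed for a different Python than the one running the demo.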

Calibrate Stereo Cameras.

Using the stereo camera pair? Run the DepthAI calibration script.
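Calibration matters because stereo depth depends directly on the camera geometry. In the textbook rectified pinhole model, depth is Z = f · B / d: the focal length in pixels (f) times the baseline between the cameras (B), divided by the disparity in pixels (d). The sketch below shows that relation only. It is not necessarily how DepthAI computes depth internally, and the numbers in the usage note are made up for illustration.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Rectified pinhole stereo: Z = f * B / d, in metres when B is in metres."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means a point at infinity")
    return focal_length_px * baseline_m / disparity_px
```

With hypothetical values f = 800 px, B = 0.075 m and d = 40 px, the depth is 1.5 m. If the focal length were off by 2% (816 px), the same disparity would give 1.53 m. That proportional error is why an uncalibrated pair reports wrong distances.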

Download and run DepthAI Python examples.

We’ll execute a DepthAI example Python script to ensure your setup is configured correctly. Follow these steps to test DepthAI:

- Start a terminal session.

- Access your local copy of depthai: `cd [depthai repo]`

- Run `python3 depthai_demo.py`.
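The terminal steps above can also be scripted. This sketch runs `depthai_demo.py` with the repository as the working directory, which is equivalent to `cd` followed by `python3 depthai_demo.py`. The helper names and the repository path you pass in are hypothetical.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

DEMO_SCRIPT = "depthai_demo.py"


def demo_command() -> List[str]:
    """Command to run the demo with the interpreter executing this script."""
    return [sys.executable, DEMO_SCRIPT]


def run_demo(repo_dir: str) -> int:
    """Run the demo from inside the depthai checkout and return its exit code."""
    repo = Path(repo_dir)
    if not (repo / DEMO_SCRIPT).is_file():
        raise FileNotFoundError("{} not found in {}".format(DEMO_SCRIPT, repo))
    # cwd=repo mirrors the manual `cd [depthai repo]` step.
    return subprocess.run(demo_command(), cwd=str(repo)).returncode
```

Call it as `run_demo("/path/to/your/depthai")`. Using `sys.executable` rather than a hard-coded `python3` ensures the demo runs under the same interpreter (and virtual environment) where the DepthAI API was installed.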

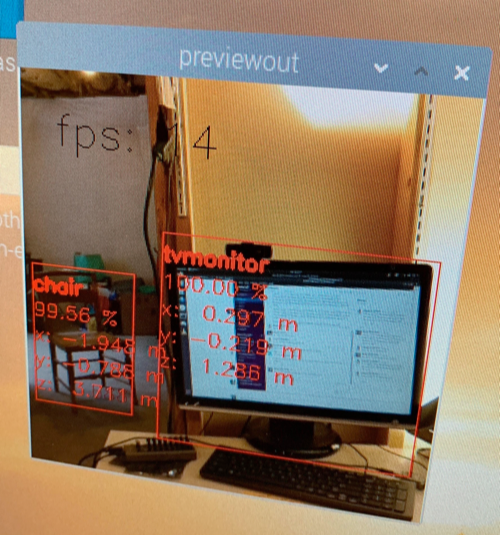

The script launches a window, starts the cameras, and displays a video stream annotated with object localization metadata.

In the screenshot above, DepthAI identified a tv monitor (1.286 m from the camera) and a chair (3.711 m from the camera). See the list of object labels in our pre-trained OpenVINO model tutorial.
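Each detection pairs a label index from the model's label map with a distance. This sketch formats such pairs nearest-first, like the readouts in the screenshot. It assumes the default detector uses the 21-entry PASCAL VOC label map common to MobileNet-SSD models; check the label list in the model tutorial for the model you actually run. `Detection` and `describe` are hypothetical host-side names, not DepthAI types.

```python
from typing import List, NamedTuple

# Assumption: PASCAL VOC label map as used by MobileNet-SSD (index 0 = background).
VOC_LABELS = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car",
    "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]


class Detection(NamedTuple):
    """Hypothetical record: a label index and the distance to the object in metres."""
    label: int
    distance_m: float


def describe(detections: List[Detection]) -> List[str]:
    """Format detections nearest-first, e.g. 'tvmonitor: 1.286 m'."""
    ordered = sorted(detections, key=lambda d: d.distance_m)
    return ["{}: {:.3f} m".format(VOC_LABELS[d.label], d.distance_m) for d in ordered]
```

With the two objects from the screenshot, `describe([Detection(9, 3.711), Detection(20, 1.286)])` returns `['tvmonitor: 1.286 m', 'chair: 3.711 m']`.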
